Scaling AI solutions for large datasets is one of the most significant challenges in modern technology. With the growing availability of data across industries such as healthcare, finance, and e-commerce, AI applications need to manage and process increasingly large datasets. However, handling such massive data volumes isn’t as simple as adding more hardware or increasing storage. Instead, it requires a combination of sophisticated algorithms, optimized infrastructure, and strategic resource management.

The goal of scaling AI for large datasets isn’t just about increasing capacity. It’s about maintaining performance, reducing latency, and ensuring that AI models can deliver accurate predictions and insights without becoming overwhelmed by the size or complexity of the data.

In this article, we’ll explore the challenges, solutions, and emerging trends in scaling AI for large datasets. Whether you’re building a system to handle real-time data streams or optimizing predictive models that rely on historical data, understanding how to scale AI solutions effectively is critical.

Understanding the Importance of Large Datasets

Large datasets are the foundation of modern AI. The more relevant, high-quality data a model has to learn from, the more accurate its predictions tend to be. In machine learning, and deep learning in particular, models rely on massive amounts of data to recognize patterns, predict outcomes, and make decisions.

However, large datasets pose unique challenges. The computational power required to process these datasets can be immense. Moreover, the speed at which data is growing outpaces traditional data storage and processing capabilities. This is why scaling AI solutions is not just a matter of efficiency; it’s a necessity for organizations looking to harness the full power of their data.

The key to effective AI solutions lies in the ability to handle data at scale, enabling better decision-making, enhancing customer experiences, and driving innovation.

Challenges in Managing Large Datasets

Scaling AI solutions for large datasets introduces several significant challenges:

- Storage Requirements: As datasets grow, they demand more storage space. This issue is compounded when dealing with high-resolution images, video data, or complex data formats.

- Computational Costs: Processing large datasets requires substantial computational power. Traditional single-processor machines are often insufficient, especially for training large models, so these workloads typically rely on specialized hardware like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units).

- Data Latency: Large datasets can slow down AI processes, especially in real-time applications. Latency affects how quickly models can make predictions, which is critical in industries such as finance or healthcare.

- Data Integrity and Quality: Handling large volumes of data increases the risk of errors, such as missing or inconsistent data points. Ensuring data integrity and quality is vital for accurate AI outcomes.

Despite these challenges, advancements in computing infrastructure and AI algorithms make it possible to manage and scale AI solutions for large datasets efficiently.
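To make the data integrity point concrete, here is a minimal Python sketch of a streaming quality check. It reads records one at a time, counts missing required fields and values that should be numeric but are not, and never holds the full dataset in memory. The function name `audit_rows`, the column names, and the sample rows are all hypothetical; a real pipeline would read from a file or database and apply its own validation rules.

```python
import csv
import io
from collections import Counter


def audit_rows(rows, required_fields, numeric_fields):
    """Stream over dict rows and count missing and non-numeric values.

    Rows are processed one at a time, so memory use does not grow with
    the size of the dataset. (Hypothetical helper for illustration.)
    """
    issues = Counter()
    total = 0
    for row in rows:
        total += 1
        for field in required_fields:
            value = row.get(field)
            if value is None or value.strip() == "":
                issues[f"missing:{field}"] += 1
        for field in numeric_fields:
            value = row.get(field)
            if value is None or value.strip() == "":
                continue  # empty values are handled by the missing-field check
            try:
                float(value)
            except ValueError:
                issues[f"non_numeric:{field}"] += 1
    return total, issues


# Hypothetical sample data; in practice this could be open("data.csv", newline="")
sample = io.StringIO(
    "id,age,amount\n"
    "1,34,19.99\n"
    "2,,5.00\n"
    "3,forty,12.50\n"
)
total, issues = audit_rows(csv.DictReader(sample), ["id", "age", "amount"], ["age", "amount"])
print(total, dict(issues))  # prints: 3 {'missing:age': 1, 'non_numeric:age': 1}
```

Because the check is a single pass over a stream of rows, the same function works whether the input holds three records or three billion.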

Why Scaling AI Solutions is Crucial

Scaling AI solutions isn’t just about coping with the volume of data—it’s about leveraging that data to unlock new capabilities. For instance, industries such as healthcare depend on large datasets to improve diagnosis accuracy and treatment personalization. Similarly, e-commerce platforms use large datasets to refine recommendation algorithms, offering personalized product suggestions based on vast amounts of customer data.

Without the ability to scale, AI models struggle to keep up with growing data: training slows down, predictions lag, and valuable data goes unused. The result is inefficiency, poor performance, and missed opportunities. Scalable AI solutions allow businesses to capitalize on the increasing availability of data, transforming insights into tangible results.

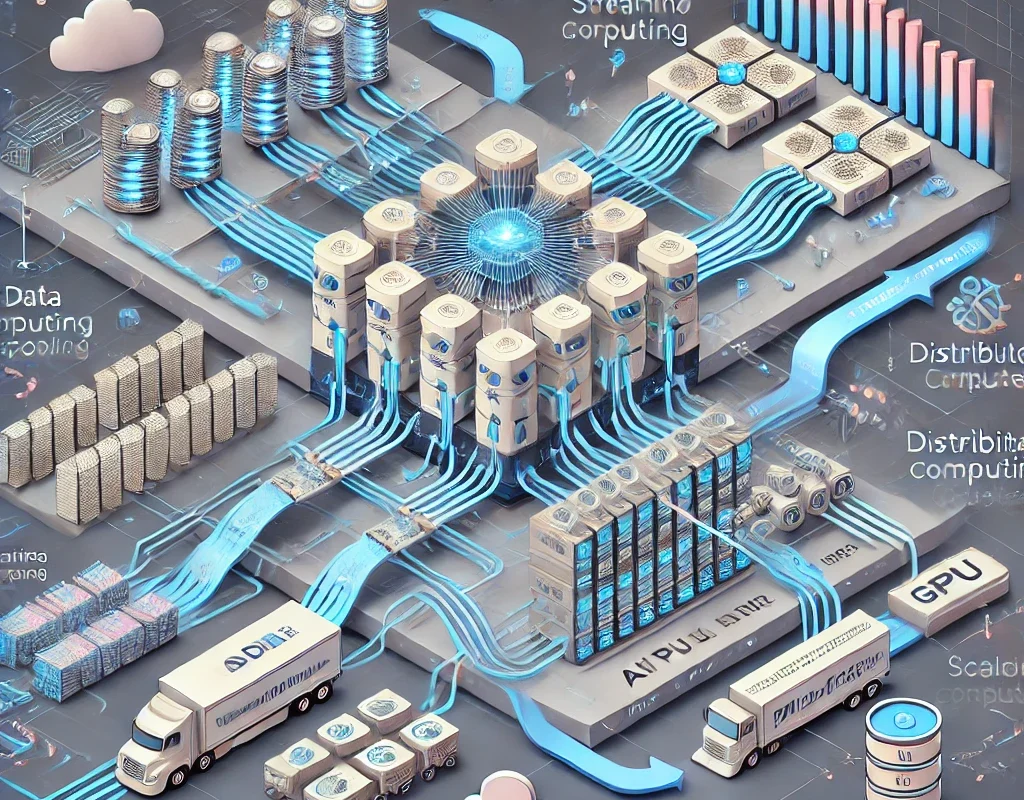

Key Technologies for Scaling AI

Several key technologies support the scaling of AI solutions for large datasets. These include:

- Distributed Computing: Distributing tasks across multiple machines allows AI models to process large datasets more quickly by leveraging the combined power of several processors.

- Cloud Computing: Platforms such as AWS, Google Cloud, and Microsoft Azure provide scalable environments where AI workloads can grow dynamically, adjusting to the size and complexity of the data.

- Parallel Processing: Breaking tasks into independent pieces that run simultaneously, for example across multiple CPU cores or GPU threads, increases the efficiency of AI computations, especially when dealing with large datasets.

- AutoML (Automated Machine Learning): AutoML tools automate steps such as model selection and hyperparameter tuning, making it easier to scale solutions without needing a large team of data scientists.

By combining these technologies, organizations can build AI solutions that keep pace with ever-growing datasets without compromising performance.
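As a rough illustration of parallel processing, the sketch below splits a list into chunks and normalizes each chunk in a separate worker process using Python's standard `concurrent.futures.ProcessPoolExecutor`. The normalization step and its 0 to 255 bounds are hypothetical stand-ins for any CPU-heavy task that can be applied to each chunk independently.

```python
from concurrent.futures import ProcessPoolExecutor


def normalize_chunk(chunk):
    """Scale each value to the 0-1 range (hypothetical 8-bit pixel bounds)."""
    lo, hi = 0.0, 255.0
    return [(x - lo) / (hi - lo) for x in chunk]


def split_into_chunks(data, n_chunks):
    """Split data into at most n_chunks contiguous pieces of similar size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_normalize(data, workers=4):
    chunks = split_into_chunks(data, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, so chunks can be concatenated
        results = list(pool.map(normalize_chunk, chunks))
    return [x for chunk in results for x in chunk]


if __name__ == "__main__":
    out = parallel_normalize(list(range(256)))
    print(out[0], out[-1])  # prints: 0.0 1.0
```

Processes are used rather than threads because, in standard CPython builds, the global interpreter lock (GIL) prevents CPU-bound threads from running in parallel. The `if __name__ == "__main__":` guard is needed on platforms that start worker processes by re-importing the main module.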

Infrastructure Requirements

Scaling AI for large datasets requires specific infrastructure considerations. These include:

- High-performance Computing (HPC): AI models, particularly deep learning models, require immense processing power. Clusters equipped with accelerators such as GPUs or TPUs are essential for scaling these workloads.

- Networking Capabilities: Large datasets require robust networking to transfer data efficiently between storage and processing units. Low-latency, high-throughput networks ensure smooth operations.

- Data Storage Solutions: Scalable storage systems that can expand with data growth, such as cloud storage or distributed file systems, are vital.

Organizations must invest in the right infrastructure to ensure that their AI solutions can scale effectively, offering high performance and reliability at every stage of data processing.
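A quick back-of-the-envelope calculation shows why network throughput matters. The sketch below estimates transfer time as dataset size (in bits) divided by effective throughput. The 10 TB dataset, the link speeds, and the optional `efficiency` factor are illustrative assumptions; real transfers are also limited by latency, protocol overhead, and storage read speeds.

```python
def transfer_time_seconds(size_bytes, throughput_bits_per_s, efficiency=1.0):
    """Estimate how long it takes to move a dataset over a network link.

    efficiency < 1.0 is an assumed fudge factor for protocol overhead and
    contention; real values depend on the network and storage stack.
    """
    if not 0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return size_bytes * 8 / (throughput_bits_per_s * efficiency)


TB = 10**12  # decimal terabyte, in bytes
for gbps in (1, 10, 100):
    seconds = transfer_time_seconds(10 * TB, gbps * 10**9)
    print(f"10 TB over {gbps} Gbit/s: {seconds / 3600:.1f} hours")
# prints 22.2, 2.2 and 0.2 hours respectively
```

Under these assumptions, moving 10 TB takes roughly 22 hours over a 1 Gbit/s link but under 15 minutes over 100 Gbit/s, which is why storage and compute are usually connected by high-throughput networks.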

AI Model Complexity and Large Datasets

As AI models become more complex, particularly in deep learning, they require larger datasets to train effectively. Complex models have more parameters to fit, and with too little data they tend to memorize the training examples (overfitting) instead of learning general patterns. The larger the dataset, the more reliably these parameters can be tuned.
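To see how quickly parameter counts grow, the sketch below counts the weights and biases in a fully connected network. The layer sizes are hypothetical examples (784 inputs corresponds to a flattened 28x28 image).

```python
def dense_param_count(layer_sizes):
    """Weights plus biases for a fully connected network.

    layer_sizes lists the width of each layer, input first, output last.
    A layer with n_in inputs and n_out outputs has n_in * n_out weights
    and n_out biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


small = dense_param_count([784, 128, 10])          # hypothetical small model
large = dense_param_count([784, 1024, 1024, 10])   # hypothetical wider, deeper model
print(small, large)  # prints: 101770 1863690
```

Widening and deepening the network in this example multiplies the parameter count by roughly 18, and every one of those parameters has to be constrained by the training data.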

However, training such models with large datasets requires significant computational resources and time. Techniques such as distributed training, where the model is trained across multiple machines simultaneously, can alleviate some of this burden.

Moreover, scaling AI solutions for complex models often requires specialized hardware, like GPUs or TPUs, to handle the heavy computational loads. Without scaling, training times can stretch from hours to days or even weeks, severely limiting the usefulness of the model.
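The core idea of data-parallel distributed training can be sketched in plain Python: each worker computes a gradient on its own shard of the data, and the gradients are combined into a weighted average that equals the gradient over the full batch. This is a simplified, single-process simulation: the linear model, the synthetic data, the round-robin sharding, and the learning rate are illustrative assumptions, and the averaging step stands in for the collective communication (often an all-reduce) that real frameworks perform across machines.

```python
def gradient(w, b, xs, ys):
    """Mean-squared-error gradient for a 1-D linear model y = w*x + b."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb


def shard(values, n_workers):
    """Round-robin split: worker k gets items k, k + n_workers, ..."""
    return [values[k::n_workers] for k in range(n_workers)]


def averaged_gradient(w, b, xs, ys, n_workers):
    """Simulate data-parallel workers and combine their gradients."""
    local, sizes = [], []
    for xs_k, ys_k in zip(shard(xs, n_workers), shard(ys, n_workers)):
        if not xs_k:
            continue  # more workers than examples: this worker has nothing to do
        # In a real system this call would run on a separate machine or GPU.
        local.append(gradient(w, b, xs_k, ys_k))
        sizes.append(len(xs_k))
    total = sum(sizes)
    # Weighting by shard size makes the result equal the full-batch gradient;
    # this stands in for the all-reduce step in real distributed training.
    gw = sum(g[0] * s for g, s in zip(local, sizes)) / total
    gb = sum(g[1] * s for g, s in zip(local, sizes)) / total
    return gw, gb


# Hypothetical synthetic data drawn from y = 3x + 1
xs = [float(i) for i in range(10)]
ys = [3.0 * x + 1.0 for x in xs]

w, b, lr = 0.0, 0.0, 0.01  # illustrative starting point and learning rate
for _ in range(2000):
    gw, gb = averaged_gradient(w, b, xs, ys, n_workers=4)
    w, b = w - lr * gw, b - lr * gb
print(round(w, 2), round(b, 2))
```

Because the averaged gradient is mathematically the same as the full-batch gradient, adding workers changes how the work is divided, not the result of each training step (up to floating-point rounding).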

Optimizing Data Pipelines

A crucial aspect of scaling AI solutions is the optimization of data pipelines. A data pipeline encompasses all the steps involved in collecting, processing, and storing data for AI models. When dealing with large datasets, inefficient pipelines can become bottlenecks that slow down the entire system.

Optimizing pipelines involves:

- Automation: Automating data collection, cleaning, and preprocessing to reduce the time and effort involved.

- Parallel Processing: Allowing different parts of the pipeline to run simultaneously rather than sequentially.

- Streamlining Data Flows: Ensuring that data moves smoothly from one stage of the pipeline to the next without unnecessary delays.

Efficient pipelines are essential for ensuring that AI solutions can scale without being bogged down by slow data processing.
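Automation and streamlined data flow can be illustrated with Python generators. In the minimal sketch below, each stage (read, clean, transform, batch) pulls records from the previous one, so data moves through the pipeline record by record instead of each stage waiting for the whole dataset. The column names, cleaning rules, and batch size are hypothetical. Generators stream data but still run in a single thread; true parallelism would come from combining a pipeline like this with worker processes.

```python
import csv
import io


def read_records(lines):
    """Stage 1: parse CSV lines lazily, one record at a time."""
    yield from csv.DictReader(lines)


def clean(records):
    """Stage 2: drop records with a missing amount and normalize text fields."""
    for rec in records:
        if not rec.get("amount"):
            continue
        rec["country"] = rec["country"].strip().upper()
        yield rec


def transform(records):
    """Stage 3: convert fields to the types a model expects."""
    for rec in records:
        yield {"country": rec["country"], "amount": float(rec["amount"])}


def batched(records, size):
    """Stage 4: group records into fixed-size batches for training or loading."""
    batch = []
    for rec in records:
        batch.append(rec)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # emit the final, possibly smaller, batch


# Hypothetical raw input; a real pipeline might read from files or a message queue
raw = io.StringIO("country,amount\n us ,10.5\nde,\nfr,3\nuk,7.25\n")
pipeline = batched(transform(clean(read_records(raw))), size=2)
for batch in pipeline:
    print(batch)
```

Nothing is read until the final loop asks for a batch, so memory use is bounded by the batch size rather than by the size of the input.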

Distributed Computing and AI

Distributed computing plays a critical role in scaling AI solutions for large datasets. By dividing computational tasks among multiple machines, organizations can handle datasets that would otherwise be too large for a single system.

Key advantages of distributed computing for AI include:

- Faster Processing: Distributing tasks reduces computation time, enabling AI models to process large datasets more efficiently.

- Scalability: Distributed systems can grow to handle increasing amounts of data by adding more machines, rather than replacing existing hardware or redesigning the system.

- Cost-Effectiveness: By utilizing cloud-based distributed systems, organizations can scale their AI solutions dynamically, paying only for the resources they use.

For AI solutions that need to process vast amounts of data in real time, distributed computing offers a scalable and cost-effective approach.
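The divide-and-combine pattern behind distributed computing can be sketched with a small map-reduce style aggregation. Records are routed to "nodes" by hashing their key, each node sums its values independently, and the partial results are merged. Here the nodes are simulated with plain lists in one process; the record format and node count are assumptions, and in a real cluster a distributed processing framework would handle routing, networking, and failures.

```python
import zlib
from collections import defaultdict


def partition(records, n_nodes):
    """Route each (key, value) record to a node by hashing its key.

    zlib.crc32 is used instead of hash() because Python's string hashing is
    randomized per process, and every machine must agree on the routing.
    """
    parts = [[] for _ in range(n_nodes)]
    for key, value in records:
        node = zlib.crc32(key.encode("utf-8")) % n_nodes
        parts[node].append((key, value))
    return parts


def local_aggregate(part):
    """Work done independently on each (simulated) node: sum values per key."""
    totals = defaultdict(float)
    for key, value in part:
        totals[key] += value
    return dict(totals)


def merge(partials):
    """Combine per-node results. Each key lives on exactly one node,
    so the partial dicts never overlap and can simply be merged."""
    result = {}
    for p in partials:
        result.update(p)
    return result


# Hypothetical event records: (user, amount)
events = [("alice", 3.0), ("bob", 1.5), ("alice", 2.0), ("carol", 4.0), ("bob", 0.5)]
partials = [local_aggregate(p) for p in partition(events, n_nodes=3)]
print(merge(partials))
```

Hashing on the key guarantees that all records for a given key land on the same node, so no cross-node communication is needed during the aggregation itself.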

Cloud Computing for AI Scaling

Cloud computing is one of the most powerful tools for scaling AI solutions. Cloud platforms like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure offer virtually unlimited resources that can be scaled up or down as needed.

Cloud computing benefits AI scaling in several ways:

- Elastic Resources: Cloud platforms allow organizations to scale resources up or down based on demand. This flexibility is essential when dealing with large datasets, which may require fluctuating levels of processing power.

- Cost Efficiency: Cloud providers offer a pay-as-you-go model, meaning organizations only pay for the resources they use. This is particularly beneficial for AI workloads, which may require massive resources during training but much less during inference.

- Integration with AI Tools: Many cloud platforms offer pre-built AI tools and frameworks, simplifying the process of scaling AI solutions.

Cloud computing has become the backbone of modern AI, enabling organizations to scale their solutions rapidly and efficiently.
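Elastic scaling usually comes down to a rule that maps observed load to a number of workers. The sketch below uses a proportional rule similar to the one documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current workers x observed load / target load)), clamped to a minimum and maximum. The function name, the metric (average utilization per worker), and the limits are assumptions; managed cloud autoscalers have their own policies, and production setups typically add tolerances and cooldown periods to avoid scaling up and down too often.

```python
import math


def desired_workers(current_workers, avg_load_per_worker, target_load_per_worker,
                    min_workers=1, max_workers=50):
    """Proportional scaling rule, clamped to [min_workers, max_workers].

    avg_load_per_worker is the observed average (e.g. GPU utilization in %
    or queued jobs per worker); the rule resizes the pool so the average
    moves toward target_load_per_worker. Hypothetical helper for illustration.
    """
    if current_workers <= 0 or target_load_per_worker <= 0:
        raise ValueError("workers and target must be positive")
    desired = math.ceil(current_workers * avg_load_per_worker / target_load_per_worker)
    return max(min_workers, min(max_workers, desired))


print(desired_workers(4, 90, 60))    # busy training burst: scale up
print(desired_workers(10, 12, 60))   # mostly idle during inference: scale down
print(desired_workers(8, 100, 10))   # huge backlog: capped at max_workers
```

For example, 4 workers averaging 90% utilization against a 60% target scale to 6, while 10 workers at 12% scale down to 2, which is where the pay-as-you-go savings come from.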